A quick recap on generative AI and broad LLMs

Generative AI for text is built on large language models (LLMs), which are trained to predict the next word in a sequence.

- Large language models are trained on a very large share of publicly available written text, so they capture a broad slice of written human knowledge

- The sheer volume of data makes generative AI very capable at plain-English content creation (e.g. “write me a marketing email to optimize click rates”)

- It works by taking the “context” given by the user and predicting the next word from patterns it learned in the training data.
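The next-word idea in the bullets above can be illustrated with a deliberately tiny sketch. This is not how a real LLM is built (those use neural networks trained on vastly more text); it is a toy word-pair counter, and the function names and training sentences are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    model = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        model[current_word][next_word] += 1
    return model


def predict_next_word(model, context):
    """Return the most frequent follower of the last word in the context."""
    last_word = context.lower().split()[-1]
    followers = model.get(last_word)
    if not followers:
        return None  # the model never saw this word during training
    return followers.most_common(1)[0][0]


# Made-up training text, standing in for "the training data".
training_text = (
    "the market opened higher today . "
    "the market closed lower today . "
    "the market opened higher again ."
)
model = train_bigram_model(training_text)

# The user's "context" ends in "market"; the model picks the likeliest follower.
print(predict_next_word(model, "Yesterday the market"))
```

Here "opened" follows "market" twice and "closed" only once in the training text, so the toy model picks "opened". A real LLM does the same kind of thing with far richer context than a single preceding word.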

Broad LLMs are very good at simple tasks, but less so in specialized domains. For better answers in finance, Retrieval Augmented Generation (RAG) / Smart Retriever and fine-tuned models are needed.

- Most of the data GPT learned from is not finance-related (solution: fine-tuned models)

- Training data gets stale very quickly (solution: retrieval augmented generation)

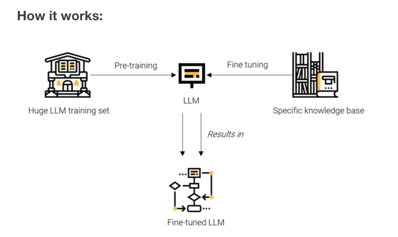

Fine-tuned models

Fine-tuned models are pre-trained and then adjusted with additional training on a specific, smaller dataset.

How it produces better answers in finance: Feed the pre-trained model a domain-specific dataset (like finance data) and it becomes better at answering domain-specific questions.
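As a rough analogy only, the effect of fine-tuning can be shown with a toy word-pair counter: "pre-train" on general text, then continue training on a small finance text and watch the prediction shift. Real fine-tuning adjusts neural network weights rather than counts; the function names, the sample sentences, and the `weight` parameter are all assumptions made for this sketch.

```python
from collections import Counter, defaultdict


def update_counts(model, text, weight=1):
    """Add word-pair counts from text to the model (toy stand-in for training)."""
    words = text.lower().split()
    for current_word, next_word in zip(words, words[1:]):
        model[current_word][next_word] += weight
    return model


def predict_next_word(model, word):
    """Return the most frequent follower of a word, or None if unseen."""
    followers = model.get(word.lower())
    return followers.most_common(1)[0][0] if followers else None


# Made-up corpora for illustration.
general_text = "the river bank flooded . the river bank eroded . the bank flooded again ."
finance_text = "the bank raised rates . the bank raised capital ."

# "Pre-training" on broad text.
model = update_counts(defaultdict(Counter), general_text)
before = predict_next_word(model, "bank")

# "Fine-tuning": additional training on a smaller, domain-specific dataset,
# weighted more heavily so it can shift the model's behavior.
update_counts(model, finance_text, weight=3)
after = predict_next_word(model, "bank")

print(before, "->", after)
```

Before the extra training the toy model completes "bank" with "flooded"; afterwards it prefers "raised", which is the finance-flavored continuation.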

Retrieval Augmented Generation (RAG) / Smart Retriever

RAG/Smart Retrievers ensure the LLM references a particular knowledge base outside of its training data before generating a response.

How it produces better answers in finance: If you can feed the LLM the correct information (e.g. a recent 10-K), then you can solve the problem of the training data being stale.
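A minimal sketch of the retrieval step, assuming a simple word-overlap score in place of the embedding search a production retriever would likely use. The documents, source labels, and figures below are invented placeholders, not real filings, and the final call to an LLM is left out.

```python
import re


def tokenize(text):
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9\-]+", text.lower()))


def retrieve(question, documents, top_k=1):
    """Rank documents by how many words they share with the question."""
    question_tokens = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_tokens & tokenize(doc["text"])),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question, documents):
    """Put the retrieved text in front of the question before calling an LLM."""
    context = "\n".join(
        f"[{doc['source']}] {doc['text']}" for doc in retrieve(question, documents)
    )
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


# Hypothetical knowledge base; the content is made up for illustration.
documents = [
    {"source": "hypothetical-10-K-excerpt",
     "text": "Total revenue for the fiscal year was 120 million dollars."},
    {"source": "hypothetical-press-release",
     "text": "The company opened a new office in Austin."},
]

question = "What was total revenue for the fiscal year?"
prompt = build_prompt(question, documents)
print(prompt)
```

The prompt that reaches the model now carries the relevant excerpt, so the answer can come from current documents rather than from whatever the model memorized during training.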

The best use case for LLMs is task automation and Alfa does just that

AI is continuing to see improvements in:

- Basic reasoning - ability to infer facts using preexisting data

- Access to broad human knowledge + very good recall - ability to reference a wide range of documented knowledge

- Tireless work - ability to scale up or down to match changing workloads

This leads to the conclusion that the best current use of the technology is very “task”-oriented: ensure the LLM has access to the correct data and is trained correctly to do highly customized tasks.